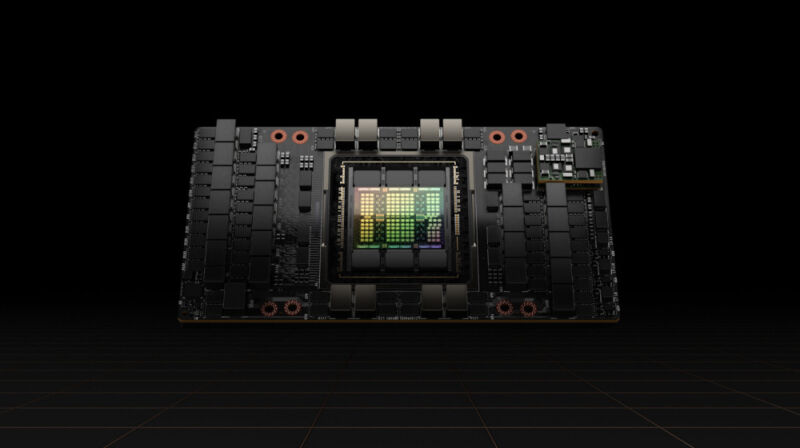

A press photo of the Nvidia H100 Tensor Core GPU. (credit: Nvidia)

The US acted aggressively last year to limit China’s ability to develop artificial intelligence for military purposes, blocking the sale there of the most advanced US chips used to train AI systems.

Yet big advances in the chips used to develop generative AI mean that the latest US technology on sale in China is more powerful than anything available there before. That holds even though the chips have been deliberately hobbled for the Chinese market, making them less capable than products available elsewhere in the world.

The result has been soaring Chinese orders for the latest advanced US processors. China’s leading Internet companies have placed orders for $5 billion worth of chips from Nvidia, whose graphics processing units have become the workhorse for training large AI models.


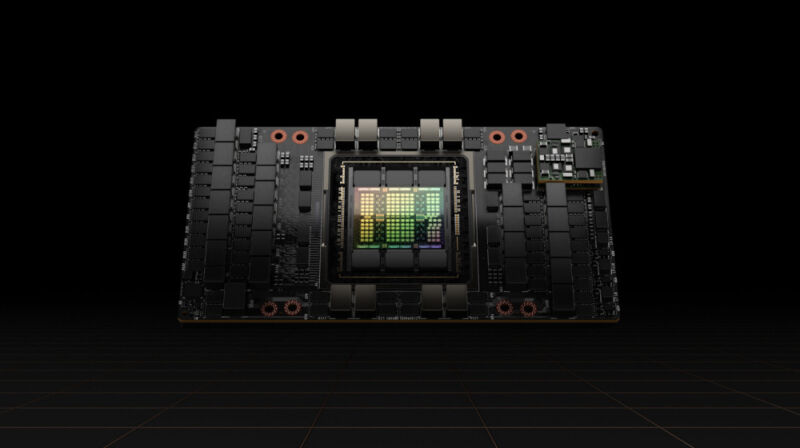

Enlarge / A press photo of the Nvidia H100 Tensor Core GPU. (credit: Nvidia)

The US acted aggressively last year to limit China’s ability to develop artificial intelligence for military purposes, blocking the sale there of the most advanced US chips used to train AI systems.

Big advances in the chips used to develop generative AI have meant that the latest US technology on sale in China is more powerful than anything available before. That is despite the fact that the chips have been deliberately hobbled for the Chinese market to limit their capabilities, making them less effective than products available elsewhere in the world.

The result has been soaring Chinese orders for the latest advanced US processors. China’s leading Internet companies have placed orders for $5 billion worth of chips from Nvidia, whose graphical processing units have become the workhorse for training large AI models.

Read 24 remaining paragraphs | Comments

August 21, 2023 at 11:28PM
